Safe Local Motion Planning with Self-Supervised Freespace Forecasting

CVPR 2021

Peiyun Hu¹, Aaron Huang¹, John Dolan¹, David Held¹, Deva Ramanan¹˒²

²Argo AI

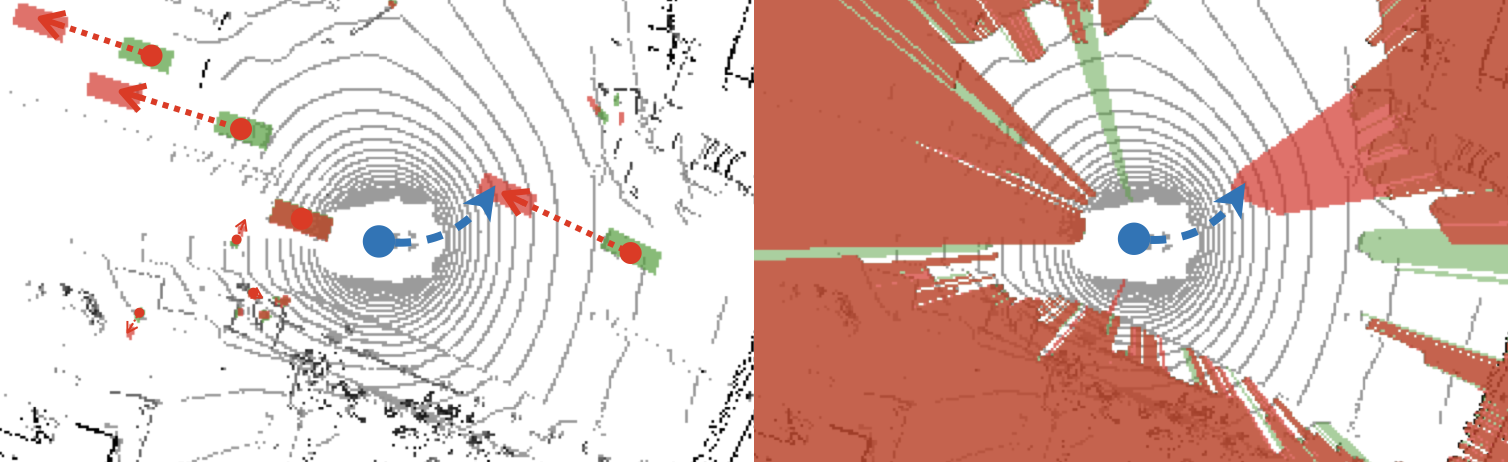

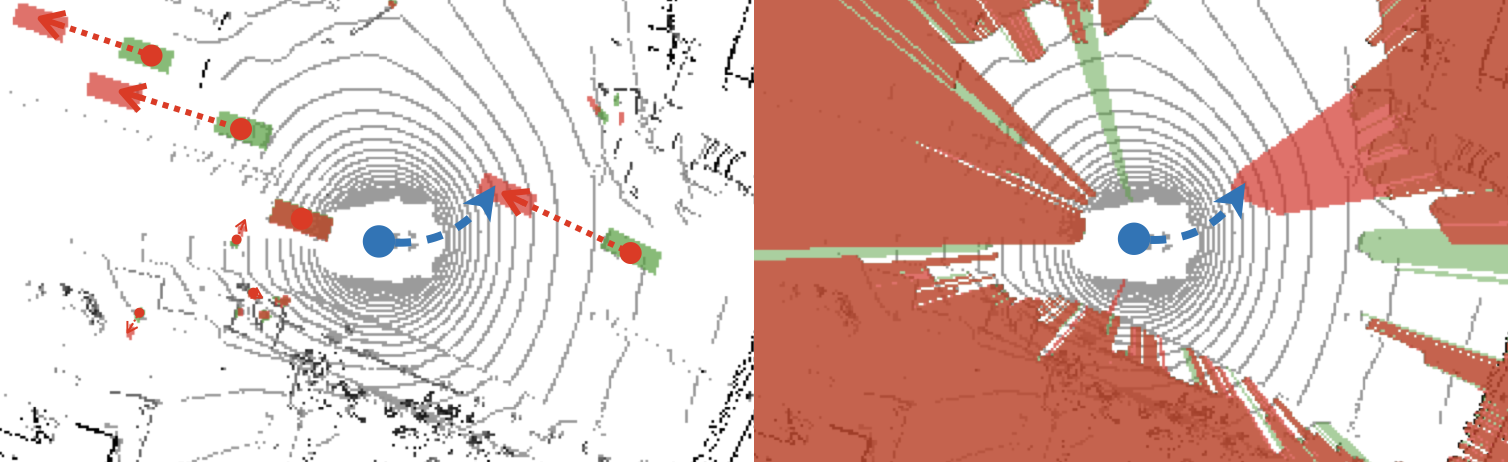

Safe local motion planning for autonomous driving in dynamic environments requires forecasting how the scene evolves. Practical autonomy stacks adopt a semantic object-centric representation of a dynamic scene and build object detection, tracking, and prediction modules to solve forecasting. However, training these modules requires enormous human effort to manually annotate objects across frames. In this work, we explore future freespace as an alternative representation to support motion planning. Our key intuition is that it is important to avoid straying into occupied space regardless of what is occupying it. Importantly, computing ground-truth future freespace is annotation-free. First, we explore freespace forecasting as a self-supervised learning task. We then demonstrate how to use forecasted freespace to identify collision-prone plans from off-the-shelf motion planners. Finally, we propose future freespace as an additional source of annotation-free supervision and demonstrate how to integrate such supervision into learning-based planners. Experimental results on nuScenes and CARLA suggest that both approaches lead to a significant reduction in collision rates.
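To make the idea of identifying collision-prone plans concrete, here is a minimal sketch of one way a candidate plan could be checked against forecasted freespace. It is an illustration under stated assumptions, not the method used in the paper: the bird's-eye-view grid layout, the function name `plan_is_collision_prone`, and the parameters `cell_size`, `origin`, and `threshold` are all hypothetical.

```python
import numpy as np


def plan_is_collision_prone(freespace_prob, waypoints,
                            cell_size=0.5, origin=(0.0, 0.0), threshold=0.5):
    """Flag a plan whose waypoints leave the forecasted freespace.

    Assumed (hypothetical) conventions:
      freespace_prob: (T, H, W) array; probability that each bird's-eye-view
                      cell is free at future timestep t.
      waypoints:      (T, 2) array of planned (x, y) positions in meters,
                      one waypoint per forecasted timestep.
      origin:         (x, y) of the grid corner at row 0, column 0.
    """
    freespace_prob = np.asarray(freespace_prob, dtype=float)
    waypoints = np.asarray(waypoints, dtype=float)
    num_steps, height, width = freespace_prob.shape
    if waypoints.shape != (num_steps, 2):
        raise ValueError("expected one (x, y) waypoint per forecasted timestep")

    # Convert metric coordinates to grid indices (x -> column, y -> row).
    cols = np.floor((waypoints[:, 0] - origin[0]) / cell_size).astype(int)
    rows = np.floor((waypoints[:, 1] - origin[1]) / cell_size).astype(int)

    # Waypoints outside the forecast grid are unknown, so treat them as unsafe.
    inside = (rows >= 0) & (rows < height) & (cols >= 0) & (cols < width)
    if not inside.all():
        return True

    # Look up the freespace probability at each waypoint's own timestep.
    probs = freespace_prob[np.arange(num_steps), rows, cols]
    return bool((probs < threshold).any())
```

This sketch treats the ego vehicle as a point; a more realistic check would test every cell covered by the vehicle footprint at each timestep.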

This work was supported by the CMU Argo AI Center for Autonomous Vehicle Research.